Our marketing attribution philosophy is: Attribution Should Be Practical. Starting today, let’s extend this philosophy to everything you do in measurement and analytics. Every analysis should be practical.

I received a request to blog about the wake many martech platforms leave behind. Discretion being the better part of valor, I’ll share some stories but keep the victims nameless. The point of this post is simple: everything we believe about marketing attribution applies to every business analysis your company engages in. Are your analyses practical?

So what makes analytics & data science practical? The core criterion, in my mind, is action: can your business take the analytics, data science, or measurement and immediately use it to take action in-market? For example:

- Can you immediately increase your purchase order above the manufacturer’s minimum order quantity (MOQ)?

- Can your IT team approve testing a new function on your website?

- Does your marketing chief now know where to invest next month’s budget?

Every analysis starts with good intentions. I don’t think many businesses design analytics and data science projects with the goal of never using them. So why do so many of these projects end up on the cutting-room floor?

Because every analysis output, no matter how sophisticated, will always receive this follow-up question:

“But what about [insert complication here]?”

— Every prudent business leader

And these “what about” questions, annoying as they are, are often the last line of defense between an incomplete analysis taken as gospel and the poor decision it was doomed to produce. Data scientists and business analysts bemoan how rarely business leaders act on the hard numbers and analyses they spend hours perfecting. Should business leaders be more data-driven? Absolutely.

But what data scientists, analysts, and measurement and analytics platforms don’t want to admit is that the cutting-room floor remains the best outcome for a large majority of these projects.

Examples I’ve seen from leading measurement & attribution solutions over the years:

- A six-month attribution project for a national product supplier with locations throughout the United States, where the final analysis showed all sales originated from Raleigh, NC.

- A 12-month media mix analysis project for a national multi-channel retailer where the top recommendation was to increase brand paid search advertising investment by 500%.

- An 18-month attribution engagement with a top financial services firm, where the analysis was postponed because timestamps of when customers opened their mail weren’t available.

Utility & Handling The Unknown

Even sophisticated, legitimate-looking analyses are far too often fraught with the same weakness that doomed the brutal examples above. An analysis is only as good as its ability to handle the question “But what about [insert complication here]?” These complications are known factors in a business or customer process that can’t be accounted for in the datasets, or that have no current method of measurement. In other words, useful analysis depends less on how your method handles the data you have than on how it handles the data you don’t have.

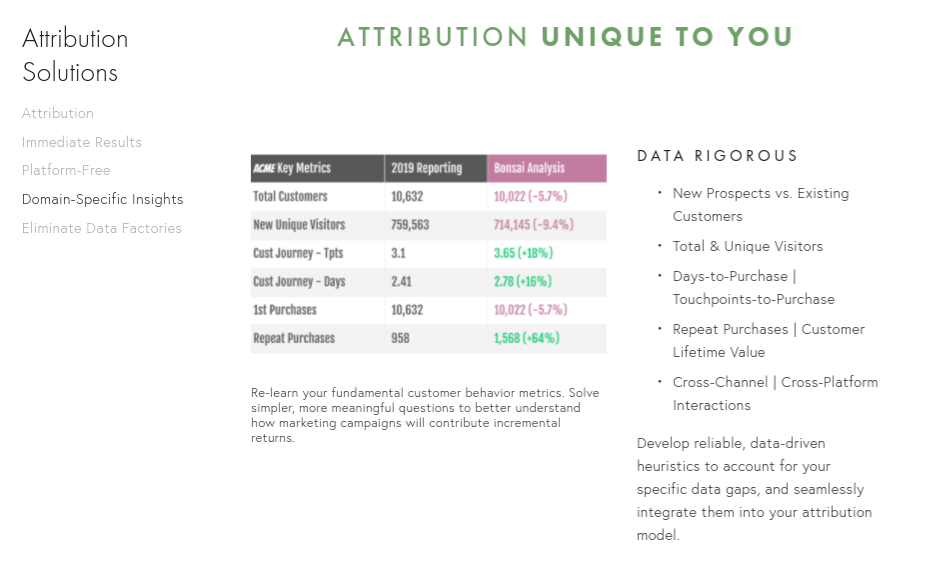

We tout one of the features of our attribution approach as “Domain Specific & Actionable”. Admittedly, our page on this sucks. [Screenshot below]

Domain Specific & Actionable means the capability to integrate specific knowledge, attained only through experience, and apply that knowledge productively to contexts and situations where measurement data remains imperfect or incomplete.

Our methodology leverages your unique qualitative knowledge about your business – your domain expertise – alongside known econometrics, principles & technical realities of modern marketing – Bonsai’s expertise – to always intelligently account for the question: But what about [insert complication]?

Develop reliable, data-driven heuristics to account for your specific data gaps, and seamlessly integrate them into your attribution model. Jargon much? What are we getting at here?

Our solutions are practical, useful and reliable because of our approach to data you don’t have.

So how could a better approach to the unknown have improved the doomed example projects above?

- A six-month attribution project for a national product supplier with locations throughout the United States, where the final analysis showed all sales originated from Raleigh, NC.

  The fix: Business domain experts know they receive sales from locations all over the US, so the sales data used must be incomplete or corrupted. Either way, the team should re-evaluate the analysis before publishing results.

- A 12-month media mix analysis project for a national multi-channel retailer where the top recommendation was to increase brand paid search advertising investment by 500%.

  The fix: Paid search domain experts know that brand search volume comes from existing customer search demand; it can’t be increased through simple budget reallocation. The analysis should re-evaluate its saturation curve methodology and scenario analysis model for digital channels before publishing results.

- An 18-month attribution engagement with a top financial services firm, where the analysis was postponed because timestamps of when customers opened their mail weren’t available.

  The fix: While limited-time offers certainly exist, almost any adult knows we measure those in days or weeks. We act on a mailer coupon or offer based on the quality or value of the offer, not the microsecond we happened to go to the mailbox that day. The analysis should re-evaluate its customer journey measurement model to reasonably account for the temporal factors that matter, while discarding the ones that clearly don’t.
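To make the three fixes more concrete, here is a minimal Python sketch of the kind of pre-publication sanity check each one implies. Everything in it is a hypothetical illustration: the function names, the 50% single-location threshold, the brand search "demand ceiling" spend figure, and the 30-day mailer response window are assumptions chosen for the example, not Bonsai’s methodology or any platform’s API.

```python
from collections import Counter
from datetime import datetime, timedelta

# Hypothetical sanity checks, one per "fix" above. Thresholds, ceilings and
# window lengths are illustrative assumptions, not a standard methodology.

def max_location_share(sales_locations):
    """Largest share of sales attributed to any single location (0 to 1)."""
    counts = Counter(sales_locations)
    return max(counts.values()) / sum(counts.values())

def capped_brand_search_spend(current_spend, multiplier, demand_ceiling_spend):
    """Brand search spend can only buy clicks on searches that already exist,
    so cap a proposed budget at an (assumed, separately estimated) spend
    level that would already cover existing brand search demand."""
    return min(current_spend * multiplier, demand_ceiling_spend)

def within_response_window(mail_drop, conversion, window_days=30):
    """Credit a mailer when the conversion falls within window_days of the
    drop date, compared at day granularity; open timestamps are not needed."""
    gap = conversion.date() - mail_drop.date()
    return timedelta(0) <= gap <= timedelta(days=window_days)

if __name__ == "__main__":
    # Example 1: 98 of 100 sales land in one city, a red flag for the data.
    locations = ["Raleigh, NC"] * 98 + ["Denver, CO", "Austin, TX"]
    share = max_location_share(locations)
    if share > 0.5:  # illustrative threshold for a national business
        print(f"Review data: one location holds {share:.0%} of sales")

    # Example 2: "increase by 500%" means 6x the current budget.
    print(capped_brand_search_spend(10_000, 6.0, 14_000))  # prints 14000

    # Example 3: an 11-day gap counts, whatever hour the mail was opened.
    print(within_response_window(datetime(2024, 3, 1, 9, 0),
                                 datetime(2024, 3, 12, 22, 15)))  # prints True
```

None of these checks replaces a domain expert; each simply encodes one piece of domain knowledge (national sales footprint, finite brand search demand, day-scale offer windows) so that an implausible result gets flagged before it is published.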

So What Should You Do?

You should do two things immediately to improve the utility of analytics, measurement and data science at your organization.

- Open up an important analysis your organization has created (or paid for) that ended up on the cutting-room floor. Do you remember the “But what about [complication]?” that doomed it or cut short its impact? Your data science & analyst teams need time for more than churning out reactive requests and analyses: they should also have time to write analysis postmortems (or “debrief” documents) where these questions and issues can be cataloged and brought to the fore when future analyses are proposed.

- Starting today, before you send that report to your boss or counterpart, ask yourself the dreaded “But what about [complication]?” question. How are you answering it today? If the answer relies on “when we get better data…” or “this analysis doesn’t take [complication] into account”, don’t send the report yet. Go find a domain expert who can help you. Not every question you receive will be a valid complication – and that’s okay! But you’ll need the help of these advisors and domain experts to determine which questions must be addressed with a thoughtful heuristic, caveat or approach.

And as for our “Domain Specific & Actionable” page: we will keep you posted on how our site updates go. Until then, email us anytime with marketing measurement questions, problems, or media & marketing investment decisions your business needs a fresh set of eyes on.