Yes, we help companies with media and marketing attribution. Our approach might differ from what you are used to hearing. We don’t do it through our own industry-leading identity-tracking technology. We’re not pioneering a new business metric, calculation, or customer engagement score. Our attribution services begin and end with what we’ve learned from practical experience across various industries, and with what we know can repeatably reduce marketing waste and improve business outcomes in any business context. It’s probably easiest to explain our approach by sharing a story about the types of issues we see every day.

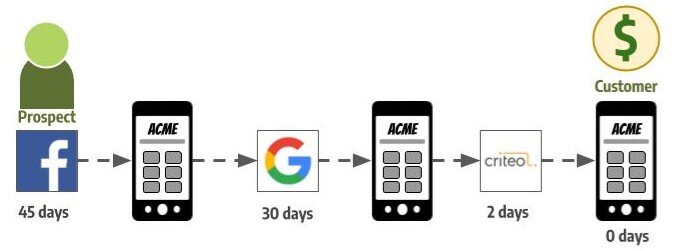

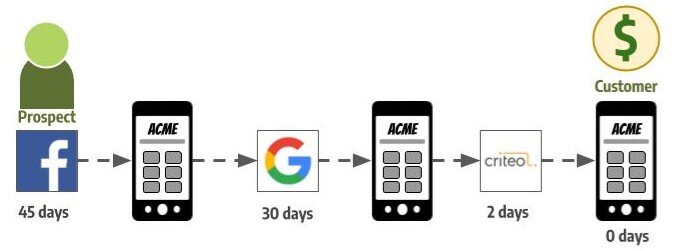

To illustrate, I’ll use my own experience as the father of a young daughter. On a typical day, I might see an ad on Facebook for a new, color-coded blocks game from the company ACME. I think to myself, “We should get that for her birthday.” Two weeks of work and family commitments fly by, and then it occurs to me: “What was that blocks set called again?”

I search, find the product, and save it to my ACME cart. Then duty calls (help with bedtime or dinner prep), and I abandon the process once again. Finally, at the end of the month, I see an ad for the product while checking the score of yesterday’s Cubs game. I click through to ACME’s site and quickly purchase before I forget again.

A typical customer journey for an anonymous retailer – one I’ve lived more times than I care to remember.

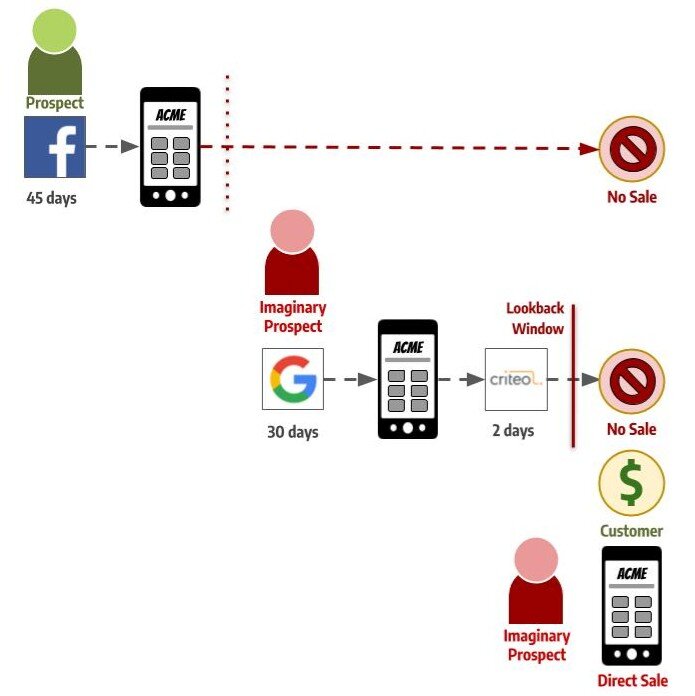

I’m happy with our new blocks set, and ACME’s marketing team reports to ACME’s finance team that its Facebook, Google, and Criteo campaigns were a great success. The finance group, however, looks at the numbers and sees a problem: Marketing’s data shows three sales where Finance sees only one. “Something’s not right,” the CFO says, and the executive team agrees. “Marketing is over-credited, and we are wasting dollars on some of our strategies.” An analytics expert among them mentions “attribution windows” to the CFO, and suddenly a new rule is proclaimed:

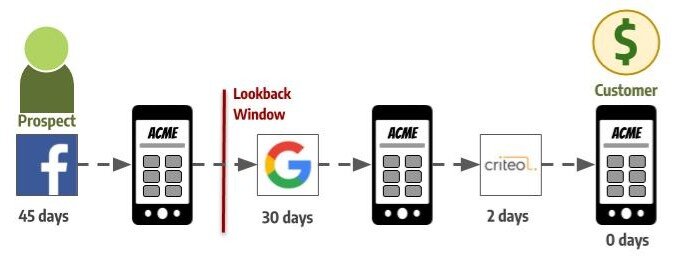

“Sales that come more than 30 days after ad exposure are not attributable to marketing – that’s far too long for media to still have an impact. From now on, we will limit marketing performance reporting to a 30-day lookback window.”

The CFO believes the problem of Marketing’s over-crediting is solved and that the wasted dollars will be recouped.

The next ACME performance marketing reports come back. A few interesting things arise:

- Traffic to ACME that comes from Facebook no longer gets credit for driving sales. According to the updated attribution, prospects coming to ACME from Facebook aren’t generating any value at all.
- Massive new prospective-customer growth appears from a different channel: Google. Funnily enough, these “new prospects” aren’t new customers at all; they are simply the same customer, counted again from a different starting line, namely whatever happened within the 30 days before the sale.

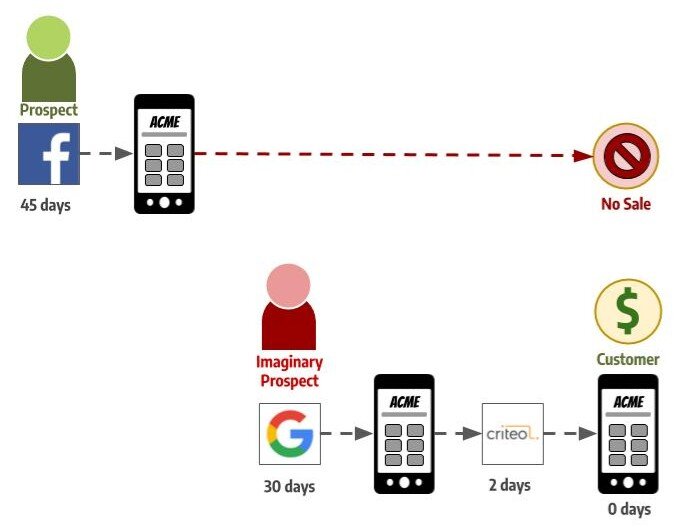

Now, some of Marketing’s proudest initiatives (Facebook campaigns) are seen as completely ineffective. Finance approves a steep cut to Facebook spend, citing: “Customers coming from Facebook weren’t converting into sales. It was wasted expense!”

Despite losing much of her Facebook marketing budget, ACME’s CMO argues that her campaigns have generated massive returns and shows her data to ACME’s finance team once again. They still do not agree. “This time,” says the CFO, “Marketing reports twice the sales impact we see at the bottom line.” The other business leaders at ACME agree: “We are over-crediting the impact of the marketing organization, and we are wasting dollars because of it.”

A new theory emerges. “Marketing should only get credit for the sales it drives immediately after a click-to-site!” champions someone from ACME’s Technology team. “All other sales are base sales or due to our promotions,” agrees ACME’s Chief Merchant. The CFO learns that the “attribution window” can be made even smaller.

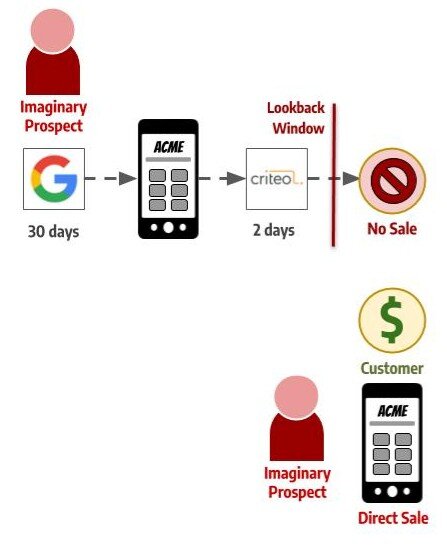

“To better account for the true impact of our marketing investments, we must only report on transactions that occur within 24 hours of an ad interaction with a customer.”

Two more interesting issues emerge at the next business performance meeting:

- The “new visitor” from Google, who was really a returning visitor whose journey began on Facebook, also generates “no sales” in the new report. Even the Criteo campaign manager loses credit for generating ACME sales, since those touchpoints occurred two days before conversion.
- A pool of direct sales emerges from “new” direct-to-ACME traffic! In reality, these “new prospects” aren’t new customers at all; they are the same customer, now counted three times, each count tracing the journey back a different distance and calling that point “the beginning”.

ACME has now incorrectly measured the outcome of the actual customer journey, incorrectly measured the outcome of an imaginary prospective journey, and incorrectly measured a direct-to-ACME customer.

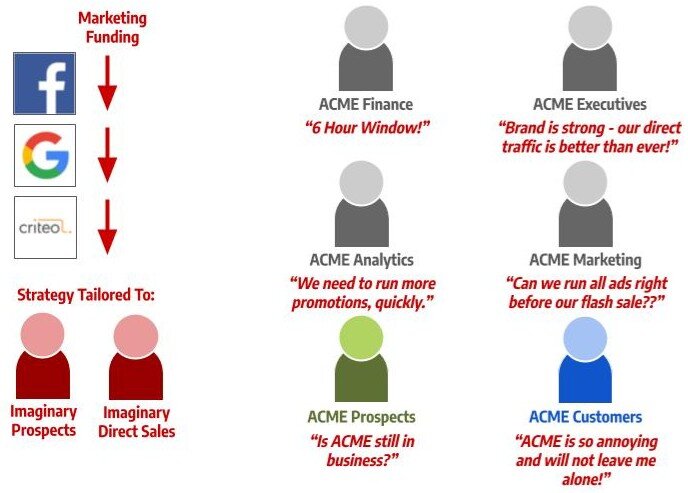

Aggressive “attribution windows” yield the perfect storm. All marketing channels get less credit from Finance, so the CFO cuts funding for all marketing channels. The CMO tailors her remaining marketing strategies toward imaginary outcomes. Analytics teams see profitable marketing ROI only around promotions and sales, so they encourage the C-suite to approve more price promotions and sales. Executives see direct traffic growth and believe everything is still okay.

Maybe worst of all, ACME’s existing customers are bombarded with ads so often that they begin to sour on the brand. Meanwhile, prime ACME prospects never hear from ACME again.

One of the great sins of marketing analytics & attribution platforms – of which there are many – is the implication that by tailoring your “attribution window”, you can achieve measurement and reporting that best represents the true business impact of your marketing investments.


An attribution window is a term of art that describes taking measurement data – say the complete digital touchpoint journey of one of your prospective customers over various websites, ad exposures, and interaction types – and intentionally breaking that single journey dataset into multiple inaccurate datasets.

These newly broken datasets are then paired with an implausible attribution model (something like “all sales are due to the very last measurable customer interaction”, commonly known as “last-click”) to try to yield attribution results that better align with a decision-maker’s gut feeling.
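A lookback window is easy to demonstrate on a single journey. The minimal Python sketch below is an illustration, not any platform’s implementation: the `Touchpoint` type and the helper functions are hypothetical, and the timestamps are invented to loosely mirror the ACME story (a Facebook ad, a Google search two weeks later, a Criteo ad two days before purchase, then a direct visit). It filters one journey by window length and reports which channel appears to “start” the journey and which channel a last-click model would credit.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Touchpoint:
    channel: str
    at: datetime


def apply_window(journey, purchase_at, lookback=None):
    """Keep touchpoints on or before the purchase and, if a lookback is given,
    no earlier than `lookback` before it. lookback=None keeps the full journey."""
    cutoff = None if lookback is None else purchase_at - lookback
    return [
        t for t in journey
        if t.at <= purchase_at and (cutoff is None or t.at >= cutoff)
    ]


def first_touch(journey):
    """Channel of the earliest kept touchpoint: the journey's apparent 'beginning'."""
    return min(journey, key=lambda t: t.at).channel if journey else None


def last_touch(journey):
    """Channel a last-click model would credit with the whole sale."""
    return max(journey, key=lambda t: t.at).channel if journey else None


# Hypothetical journey loosely following the ACME story (dates are invented).
start = datetime(2023, 5, 1, 20, 0)
journey = [
    Touchpoint("Facebook", start),                     # first ad exposure
    Touchpoint("Google", start + timedelta(days=14)),  # search two weeks later
    Touchpoint("Criteo", start + timedelta(days=33)),  # retargeting ad
    Touchpoint("Direct", start + timedelta(days=35)),  # direct visit
]
purchase_at = start + timedelta(days=35, minutes=30)

windows = {
    "full journey": None,
    "30-day": timedelta(days=30),
    "24-hour": timedelta(hours=24),
}
for label, lookback in windows.items():
    kept = apply_window(journey, purchase_at, lookback)
    print(
        f"{label:>12}: in window={[t.channel for t in kept]}, "
        f"starts at {first_touch(kept)}, last-click -> {last_touch(kept)}"
    )
```

With the full journey, the sale begins with Facebook. A 30-day window makes Google look like the starting line, and a 24-hour window leaves only Direct, so one buyer surfaces as three different “new prospects.” In this toy journey, last-click credits Direct under every window.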

Broken data and poor attribution models create three consistent problems at thousands of companies:

- Marketing initiatives best used to reach new prospects early in their decision journey are de-funded and de-prioritized.
- Marketing initiatives intended to close the sale are credited with “new customer acquisition” they are incapable of generating. My favorite example comes from companies that report on the “new prospects” their remarketing campaigns generate every week. Err, what???
- As money moves toward “converting” last-touch channels, channel managers start looking for ways to show their ads as close to the time of sale as possible. This results in unnecessary ad spend concentrated immediately before high-transaction periods, often wasting far more dollars than the CFO was trying to save to begin with. And before you blame the marketers, know that they are only human. Put in the same position, most of us would try to “catch” credit when a broken attribution model (and broken datasets) is all we are given.


“We don’t make any of those mistakes… we are sophisticated.”

If you are a seasoned marketer, an analytics professional, or simply someone deep in the trade, you are probably saying, “Yes, Matt, we believe you and agree with your comments (mb: you don’t), but you are skipping the most vital issue: measurement needs to be real-time, and since no platform can calculate the incremental impact of every touchpoint in real time, using last-click attribution with a short attribution window is the most sensible option available.”

It’s true: real-time impact indicators are best for optimizing media spend that is already in-market, especially across billions of signals and when ads are served programmatically. The key word here, though, is in-market.

Let’s say you’ve committed $100,000 to digital media strategies, and you want that $100,000 to drive as much value as possible. Would you rather spend on touchpoints where last-click sales can be attributed than on ones where they cannot? Sure. Would you rather spend on touchpoints where “first-touch” conversions can be attributed than on ones where they cannot? Sure. For the most part, every metric you have that indicates conversion contribution (first touch, last touch, or any touch in between, measured over the widest attribution window your tracking technology allows) is a great indicator of value compared with having no real-time metrics at all. No one is going to go broke optimizing their $100,000 in-market toward as many first, last, and assist conversions as possible. These are perfectly acceptable tools for programmatic bidding and real-time optimization.

But do any of those metrics give you a sense of the true bottom-line incremental impact of that $100,000 after it’s deployed? Should you use that data, or attempt to contrive or slice that data up in arbitrary or convenient ways, to mimic your intuition’s estimation of true marketing ROI and make future investments and media mix decisions based upon it?

“NO.”

The key here is accepting that measuring marketing ROI and powering media optimization are separate tasks, best tackled with different datasets and processes.

Still, many organizations will see this statement and scoff. Your reaction to the pitfalls illustrated above may be: “We don’t make any of those mistakes; we are much smarter than that at our organization; we are sophisticated.” Those more bashful might posit: “We see the issues with last-click and agree it’s imperfect, but we are lucky. 85% of our sales occur the same day a prospective customer clicks on our ad.”

To that I’d challenge: really? Do you really know that, or is that simply what your organization has always told itself? Which brings me all the way back to the beginning of this story.

If this looks unfamiliar, you are not alone: very few companies have ever looked at their own customers’ actual paths to purchase.

Very few companies have ever actually looked at the high-quality customer journey data they have right at their fingertips. If you use site, app, or asset analytics tools, you almost certainly have some yourself. If you track your sales and customer interactions on-prem or in your cloud, even better. And while it’s seductive to believe that your platform’s out-of-the-box reporting will digest all of that data and solve all of your problems for you, remember that almost every one of these out-of-the-box reports is corrupted by attribution windows and arbitrary industry standards, put in place because they are computationally convenient at scale, not because of any provable insight or consumer truth that applies to your business.
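One simple way to test a claim like “85% of our sales occur the same day a prospective customer clicks on our ad” is to compute the same-day share twice: once measured from the last known touchpoint and once from the first. The minimal Python sketch below uses four made-up purchases; the data layout (a list of touchpoint timestamps per purchase) and the `same_day_share` helper are assumptions for illustration, not a format any particular analytics tool exports.

```python
from datetime import datetime, timedelta


def same_day_share(purchases, anchor, within=timedelta(hours=24)):
    """Fraction of purchases made within `within` of the anchor touchpoint.

    anchor="last" measures from the final known touch (what last-click sees);
    anchor="first" measures from the earliest known touch in the journey.
    """
    if anchor not in ("first", "last"):
        raise ValueError("anchor must be 'first' or 'last'")
    if not purchases:
        return 0.0
    hits = 0
    for p in purchases:
        touches = sorted(p["touches"])
        ref = touches[0] if anchor == "first" else touches[-1]
        if p["purchased_at"] - ref <= within:
            hits += 1
    return hits / len(purchases)


d = datetime(2023, 5, 1)
# Four hypothetical purchases, each with its known touchpoint timestamps.
purchases = [
    {"touches": [d, d + timedelta(days=14), d + timedelta(days=35)],
     "purchased_at": d + timedelta(days=35, hours=1)},
    {"touches": [d], "purchased_at": d + timedelta(hours=3)},
    {"touches": [d, d + timedelta(days=20)],
     "purchased_at": d + timedelta(days=20, hours=2)},
    {"touches": [d, d + timedelta(days=3)],
     "purchased_at": d + timedelta(days=5)},
]

print(same_day_share(purchases, "last"))   # 0.75
print(same_day_share(purchases, "first"))  # 0.25
```

In this toy data, 75% of purchases look “same day” when measured from the last click, but only 25% do when measured from the first known touch. Which figure an organization quotes depends entirely on where it starts counting.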

But that data isn’t perfect, you say. You are right! Even the most rigorous operators have tagging issues. No matter what, every underlying dataset deals with cookie loss. And that’s before we even get to ad exposure data, which can be missing from this customer journey data altogether. While the quest for a perfect dataset is quixotic, intentionally generating worse data, drawing implausible causal inferences from that data, and expecting great business outcomes is not the answer.

Organizations serious about improving outcomes, growing customer acquisition, retaining current customers, and collaborating across marketing, finance, and analytics teams should embark on three critical actions:

- Unpack every customer & marketing analytics dataset your collective organization uses and manages: Do you know the scope of each dataset? Do you know how it differs from every other dataset? Finally, you should clearly determine the viable utility of each and every dataset before forcing your teams to rattle off another report, or account for another metric on those reports. Is each dataset fit for use with respect to in-market media optimization? Is it fit for use with respect to measuring the incremental impact on total customer sales or acquisitions, and ultimately, ROI? Datasets are rarely fit for both.
- Start with your sales data, and work your way back through your marketing & customer touchpoints to see what insights you can collect under a single view. Can you trace product sales to the shopping carts and transactions from which they came? Are you able to account for which customer made which transaction? Are you aware of the touchpoints you’ve had with that customer across your marketing & sales initiatives today, yesterday, and any time prior? There’s no golden key to perfectly unified data across all of your customers, but there are useful data – likely at considerable scale – that represent viable customer journeys with your business if you know how to approach the process.
- Ask simpler questions of your customer journey data to understand incremental effectiveness of each of your marketing investments and initiatives. Ask these questions more rigorously & repeatably to drive iterative, positive changes to your investment strategy across your entire marketing mix. Leave the three-decimal-precision, intra-day updates to the real-time optimization tools themselves to squeeze as much value out of today’s dollar as possible. Relieve your teams of the obligation to constantly attempt to reconcile these two very different datasets and responsibilities.

To learn more about attribution with Bonsai, visit our new page. If this story resonated with you, or even if you’ve had contrary experiences as a business or a customer, please share it with us so we can learn more.